Recipe to build large scale web apps

Ingredients that make your web app scale globally.

Photo by Markus Spiske on Unsplash


Today, web apps are developed with a global user base in mind, so they need to scale quickly and without disruption to serve global traffic. This post explores the architectural and infrastructural requirements for scaling any web app.

Brief history of web apps

Anyone who has worked in software development over the last decade or so must have observed a drastic change in the way software is built today. In the early days of the Internet, websites were mostly static; they were gradually replaced by what we used to call dynamic websites, backed by a database. There was no concept of Web APIs, in-memory databases or the like, just plain HTML websites. In my view, the first truly dynamic website was a search engine or an online directory (yellow pages). A search engine would accept a few keywords and return results at the click of a button. We considered a search engine a dynamic web app because it had some logic sitting behind a user interface that was accessed via a browser.

From that era of backend-heavy apps, we have arrived at a point where a significant amount of intelligent app logic is implemented on the front end, supported by most present-day frameworks and libraries, alongside the backend. Think of admin dashboards, web-based e-mail clients and the front ends of social networks: all of these involve many user interface elements, numerous user interactions and UI changes in response to those interactions. This evolution of web development was so profound that it has almost replaced the need for desktop enterprise apps. Things have evolved so fast that even web apps are now being overtaken by the new world of hybrid apps and PWAs (Progressive Web Apps).

Problem & solution of scaling-up

Systems that are rigid (complex, tightly coupled components), monolithic (all in one) and built without a clear demarcation of functional components fail to scale up. In general, to scale up any system, we need to build it to be modular (think of plug-n-play components), loosely coupled and flexible (able to react to changes). This principle is not specific to software; it applies to all kinds of systems: factories, airports, hospitals, even government and political systems. The more you observe how real-world systems work, the better a system designer you become, and software system design is no different.

To scale up software systems, we need to think about components from two perspectives: architecture and deployment infrastructure. Let’s cover each of these in detail.

Architectural considerations

Architecturally scalable applications should have small, reusable and independent components that are loosely coupled (free to move around within the larger system design). Think of how small Lego pieces of different shapes and colors are used to build something big and meaningful. In the same way, software systems should be built from small components with plug-n-play interfaces.

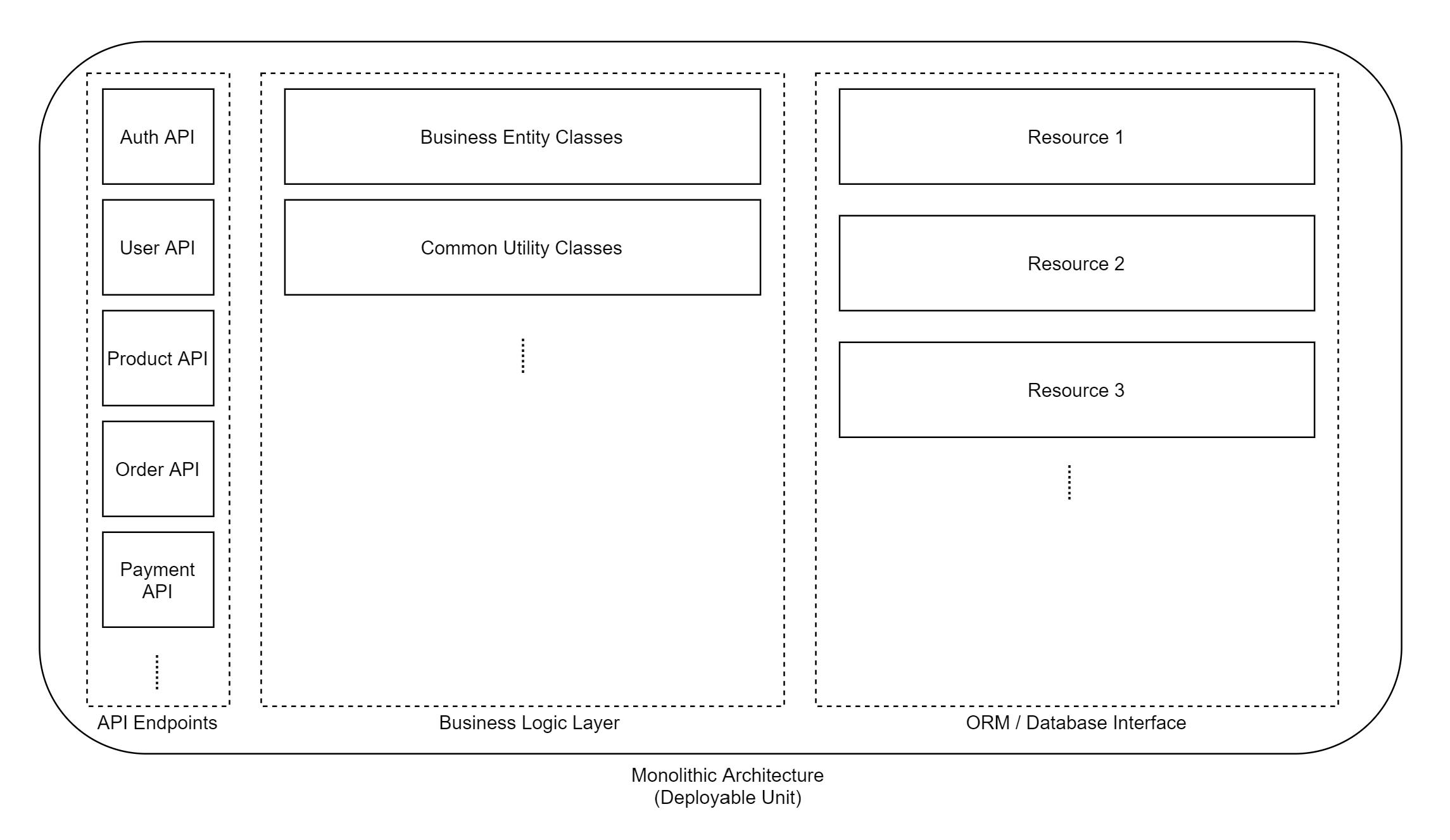

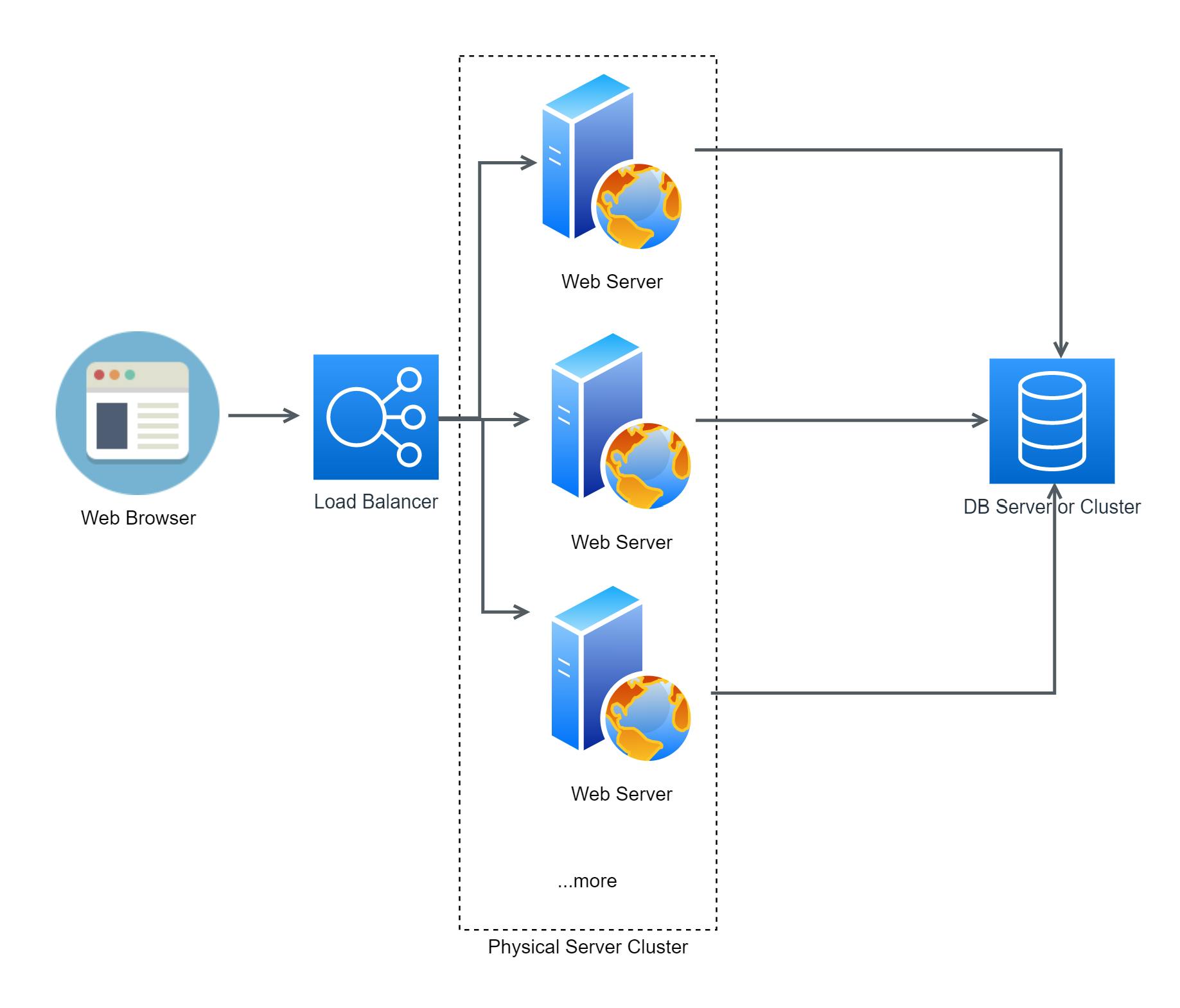
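To make the Lego analogy a bit more concrete, here is a minimal Python sketch of a plug-n-play component, assuming a hypothetical `PaymentGateway` interface. `OrderService` depends only on the interface, so any implementation that satisfies it can be swapped in without touching the service. All class and method names are illustrative, not taken from any real framework.

```python
from typing import Protocol


class PaymentGateway(Protocol):
    """The contract any payment component must satisfy (hypothetical)."""

    def charge(self, order_id: str, amount_cents: int) -> bool: ...


class FakeCardGateway:
    """One interchangeable implementation (a stand-in, not a real provider)."""

    def __init__(self) -> None:
        self.charged: dict[str, int] = {}

    def charge(self, order_id: str, amount_cents: int) -> bool:
        # Record the charge and accept any positive amount.
        self.charged[order_id] = amount_cents
        return amount_cents > 0


class OrderService:
    """Depends only on the PaymentGateway interface, not a concrete class."""

    def __init__(self, gateway: PaymentGateway) -> None:
        self.gateway = gateway

    def checkout(self, order_id: str, amount_cents: int) -> str:
        return "paid" if self.gateway.charge(order_id, amount_cents) else "rejected"


service = OrderService(FakeCardGateway())
print(service.checkout("order-1", 4999))  # paid
print(service.checkout("order-2", 0))     # rejected
```

Replacing `FakeCardGateway` with another class that has the same `charge` method requires no change to `OrderService`, which is the loose coupling the Lego analogy describes.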

Any web app can be logically divided into three main tiers: front end (UI/browser), middleware (app server) and database server. Earlier, the middleware layer used to be monolithic, with all app logic in a single deployable unit. This was the traditional way of building an app (monolithic architecture). To scale such an app, we deployed multiple instances of the whole monolithic app on either physical or virtual machines, which was neither optimal nor a great way to scale. To explain it better, think of an app with a few services: Authentication, User, Product, Order, etc. In a monolithic architecture, a single deployable unit contains the whole app (middleware server), including all of these services. If another instance is needed, the entire app has to be deployed again. A single unit of deployment in a monolithic app looks like the diagram below.

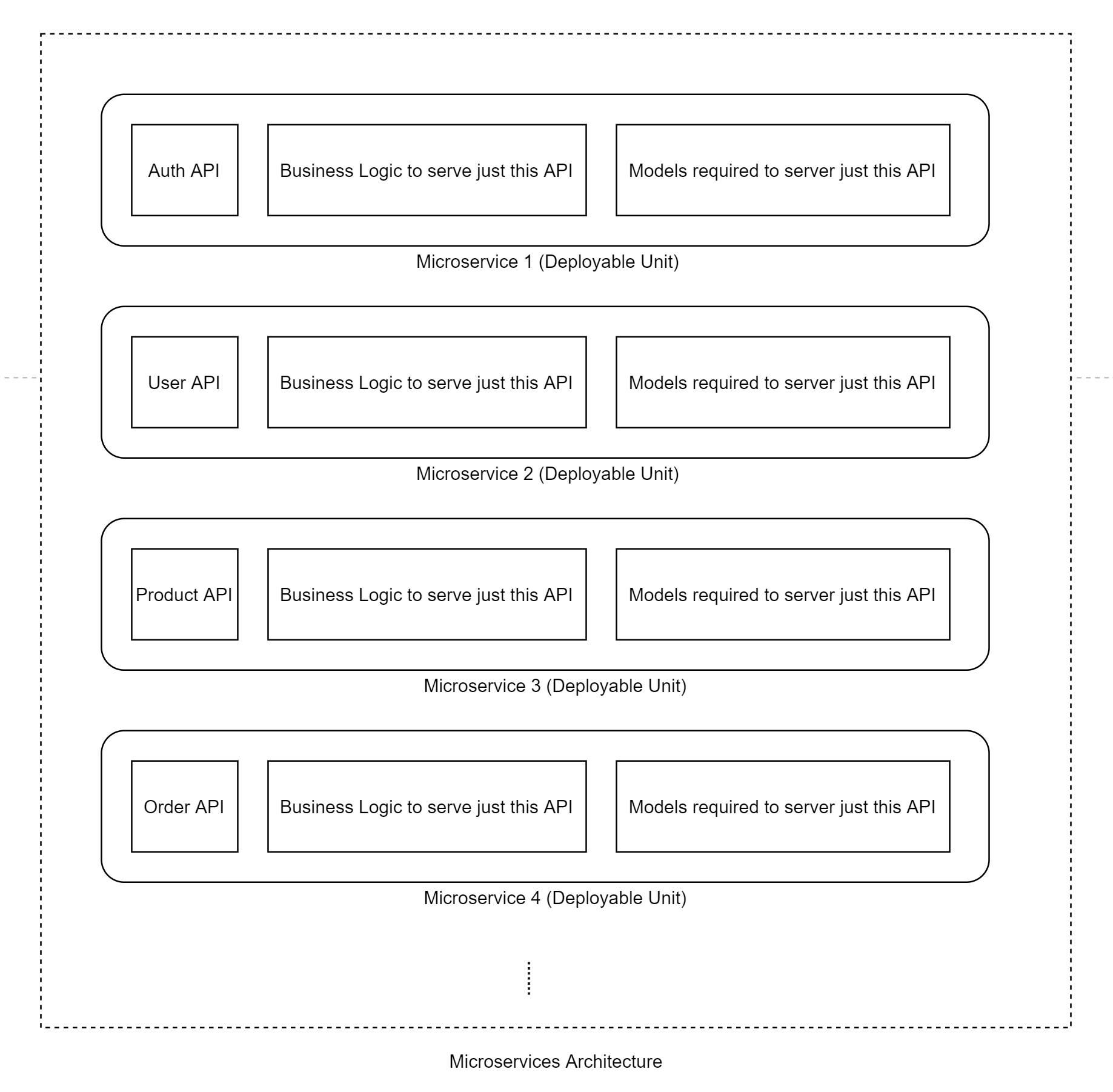

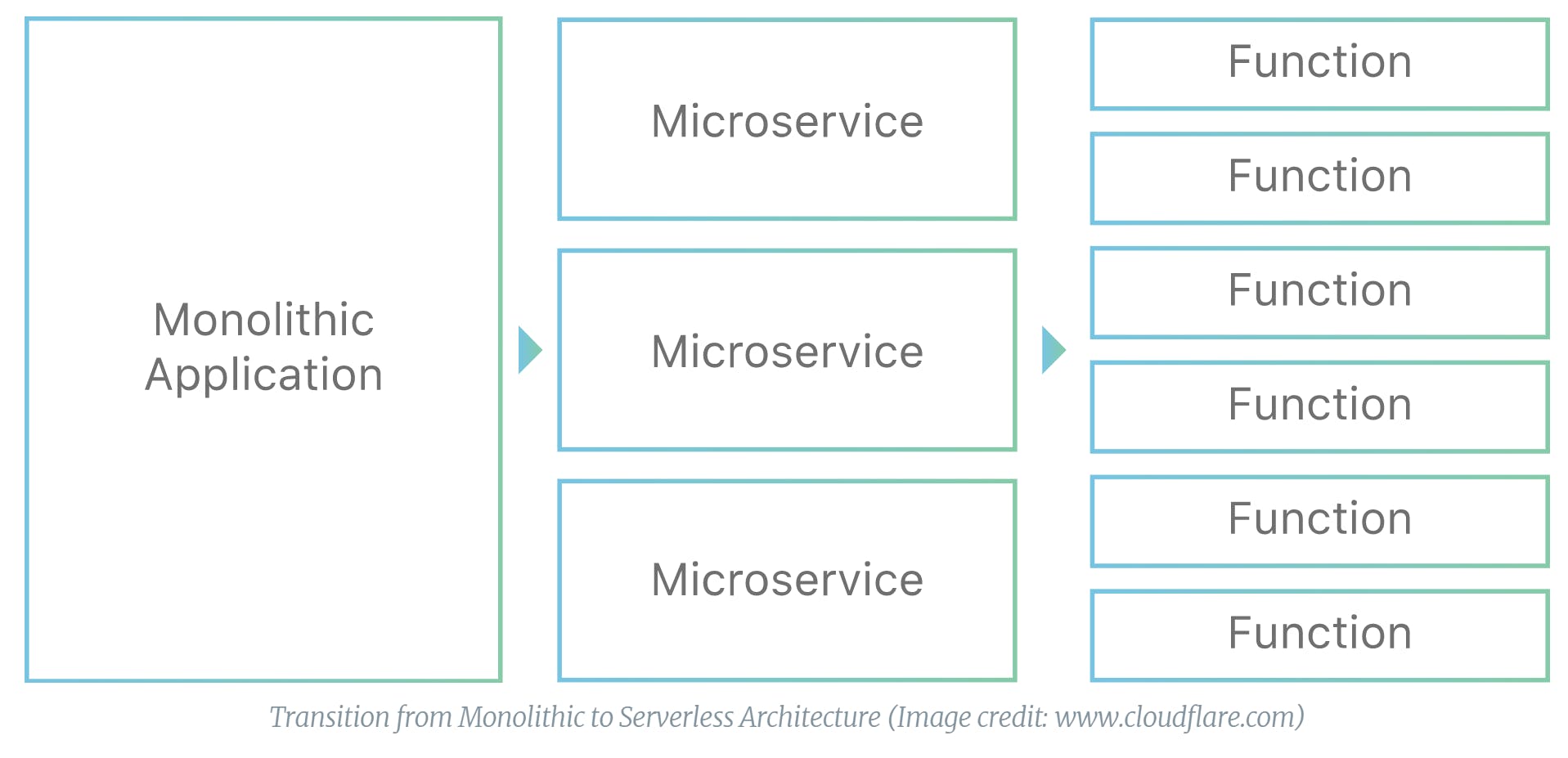

If some services are used more heavily than others, why should we deploy the less-used services with every app instance? There is no point in scaling a service that is hardly used. Instead, we need to scale up the components of the app that are used more (get more web traffic). For instance, the Order service would not be as heavily loaded as the Product service: the Order service is used only by logged-in users who want to buy a product, whereas the Product service is used by both logged-in and anonymous users to view product details on the product page. With this understanding, each service should be made an independent, loosely coupled unit of deployment. This is known as microservices architecture, where each service (a logical unit of business function) is an independently deployable unit. The diagram below shows the same.

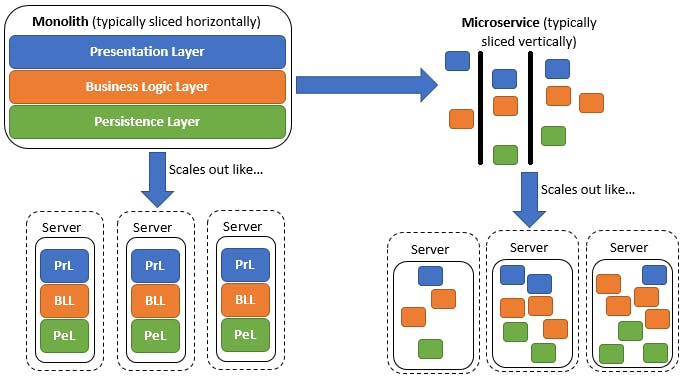

The diagram below sums up the difference between monolithic and microservices architectures. In a monolithic architecture, everything is packaged into every single deployed instance, whereas in a microservices-based architecture the application is broken down into small functional units (called microservices), each of which can be deployed separately as scaling needs dictate.
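To put rough numbers on the Product-versus-Order argument, the sketch below compares how many service copies each approach deploys. The traffic and per-instance capacity figures are made up, and it assumes (simplistically) that one monolith instance can serve each service at the same per-service capacity as a dedicated microservice instance.

```python
import math

# Hypothetical peak load per service (requests/second) and the load a single
# instance of that service can handle. Illustrative numbers only.
LOAD_RPS = {"auth": 200, "user": 150, "product": 2000, "order": 100}
CAPACITY_RPS = {"auth": 500, "user": 500, "product": 250, "order": 250}


def instances_needed(service: str) -> int:
    """Instances required for one service to absorb its own peak load."""
    return math.ceil(LOAD_RPS[service] / CAPACITY_RPS[service])


def monolith_service_copies() -> int:
    # Every monolith instance bundles every service, so the busiest service
    # dictates the instance count and all services are replicated that often.
    instances = max(instances_needed(s) for s in LOAD_RPS)
    return instances * len(LOAD_RPS)


def microservice_copies() -> int:
    # Each service is scaled independently to its own demand.
    return sum(instances_needed(s) for s in LOAD_RPS)


print(monolith_service_copies(), microservice_copies())  # 32 11
```

With these assumed numbers, the monolith must run 8 full instances (32 service copies) just because Product is busy, while the microservices layout runs 11 service instances in total.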

Like the middleware (server app), the database layer can also be scaled up or down. To make a database scalable, it needs to run on a cluster of nodes, all configured as active-active, with data distributed across all nodes. Cluster management involves growing the cluster by adding new nodes without any downtime. Generally, when architecting a scalable app, a distributed database is chosen and run as an active-active cluster.
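There are several ways to spread data over the nodes of a cluster; one widely used technique is consistent hashing, where both keys and nodes are placed on a hash ring. The sketch below is a simplified illustration of that technique, not the scheme of any particular database; node names, key names and the number of virtual nodes are assumptions. It demonstrates the property that matters for adding nodes without downtime: a new node takes over only a fraction of the keys, and every key that moves goes to the new node.

```python
import bisect
import hashlib


def stable_hash(key: str) -> int:
    # Python's built-in hash() is randomized per process, so use a digest.
    return int(hashlib.sha256(key.encode()).hexdigest(), 16)


class ConsistentHashRing:
    """Maps keys to nodes; adding a node remaps only a fraction of the keys."""

    def __init__(self, nodes, vnodes: int = 100) -> None:
        self.vnodes = vnodes                # virtual nodes per node, to even out load
        self._hashes: list[int] = []        # sorted positions on the ring
        self._owners: dict[int, str] = {}   # ring position -> node name
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        for i in range(self.vnodes):
            h = stable_hash(f"{node}#{i}")
            bisect.insort(self._hashes, h)
            self._owners[h] = node

    def node_for(self, key: str) -> str:
        # Walk clockwise to the first ring position at or after the key's hash,
        # wrapping around to the start of the ring if necessary.
        idx = bisect.bisect_left(self._hashes, stable_hash(key)) % len(self._hashes)
        return self._owners[self._hashes[idx]]


ring = ConsistentHashRing(["db-1", "db-2", "db-3"])
keys = [f"user:{i}" for i in range(1000)]
before = {k: ring.node_for(k) for k in keys}

ring.add_node("db-4")
moved = [k for k in keys if ring.node_for(k) != before[k]]
print(f"{len(moved)} of {len(keys)} keys moved")
print(all(ring.node_for(k) == "db-4" for k in moved))  # True
```

Compare this with naive `hash(key) % number_of_nodes` placement, where changing the node count reshuffles most keys and forces a large data migration.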

Deployment infrastructure considerations

Along with application architecture, the underlying infrastructure plays a huge role in making an app truly scalable. Even a well-designed, microservices-based web app cannot scale if the underlying deployment infrastructure is not scalable. In this section I will talk more about deployment strategy. The technology and tools for deployment and infrastructure management have changed drastically, mainly over the last 15 years. The diagram below shows a snapshot of the change from traditional deployment on physical servers, to virtualized deployments, to containerized deployments.

Deployment on virtualized environment

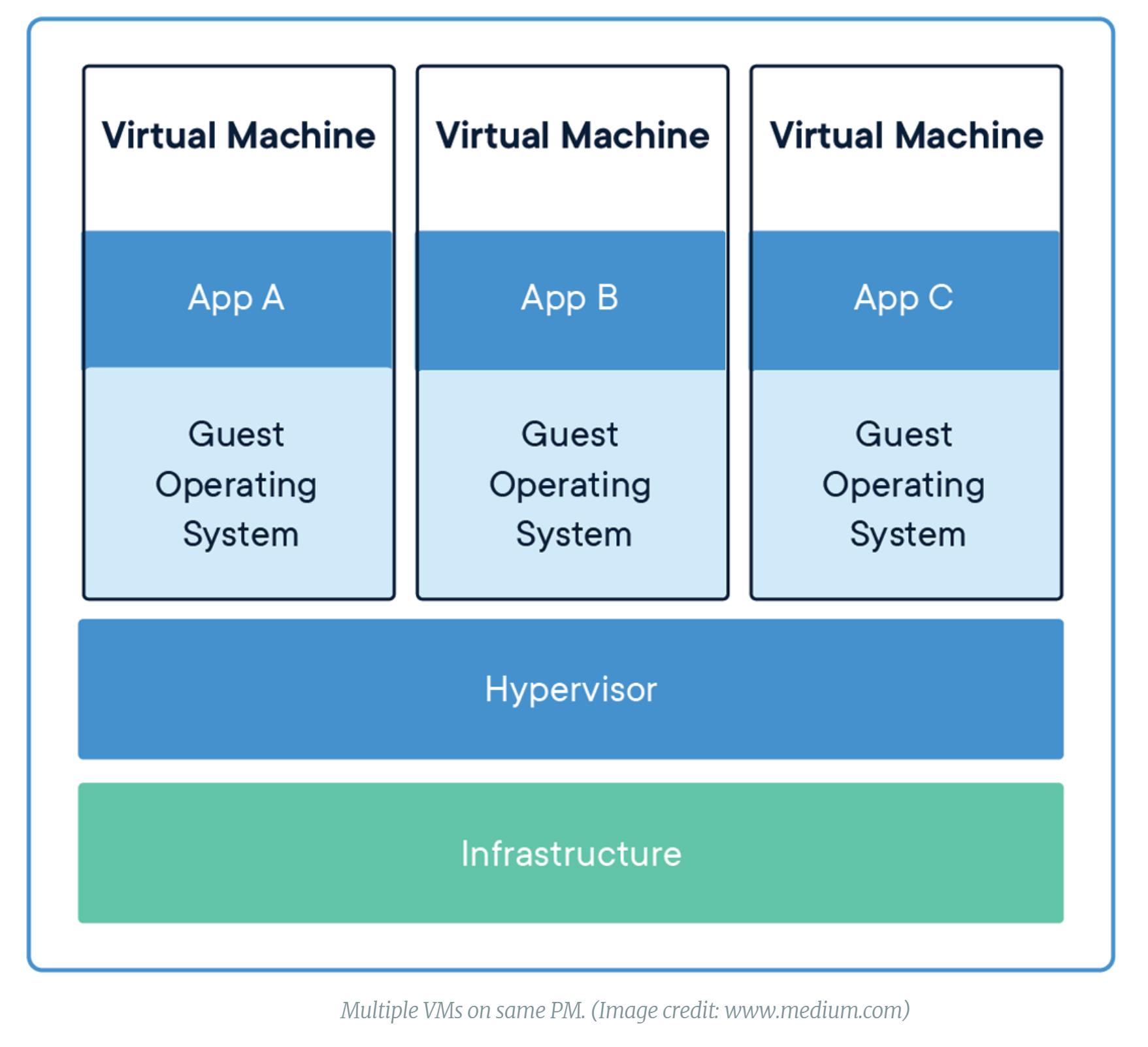

Then came the era of VMs (virtual machines). Key advantages of virtualizing the infrastructure were better resource utilization and on-demand scaling. One physical machine can now host multiple virtual machines, depending on the resource requirements of each VM. Multiple VMs can be spun up as needed, resulting in optimal utilization of the infrastructure. As demand for resources grows, the VM management software spins up VMs as required (or as defined in its configuration), each hosting an app instance, and brings them onto the network almost instantaneously. From a network topology standpoint, a virtualized deployment looks similar to a physical deployment, except that one physical machine can now host multiple virtual machines. The diagram below depicts multiple VMs hosted on a single physical machine.
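Deciding which physical machine should host a new VM, "based on the resource requirements for a VM", is essentially a packing problem. The sketch below uses simple first-fit placement on CPU and memory; real VM managers use more elaborate policies, and the host sizes and VM requests here are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A physical machine with fixed CPU and memory capacity."""

    name: str
    cpus: int
    mem_gb: int
    vms: list = field(default_factory=list)  # (vm_name, cpus, mem_gb) tuples

    def fits(self, cpus: int, mem_gb: int) -> bool:
        used_cpus = sum(vm[1] for vm in self.vms)
        used_mem = sum(vm[2] for vm in self.vms)
        return used_cpus + cpus <= self.cpus and used_mem + mem_gb <= self.mem_gb


def place_first_fit(hosts, vm_requests):
    """Place each (name, cpus, mem_gb) request on the first host with room."""
    placement = {}
    for name, cpus, mem_gb in vm_requests:
        for host in hosts:
            if host.fits(cpus, mem_gb):
                host.vms.append((name, cpus, mem_gb))
                placement[name] = host.name
                break
        else:
            placement[name] = None  # no room anywhere: time to add a physical host
    return placement


hosts = [Host("phys-1", cpus=16, mem_gb=64), Host("phys-2", cpus=16, mem_gb=64)]
requests = [("web-1", 4, 16), ("web-2", 4, 16), ("web-3", 4, 16),
            ("db-1", 8, 32), ("web-4", 4, 16)]
print(place_first_fit(hosts, requests))
# {'web-1': 'phys-1', 'web-2': 'phys-1', 'web-3': 'phys-1', 'db-1': 'phys-2', 'web-4': 'phys-1'}
```

Note how `web-4` still fits on `phys-1` after `db-1` overflowed to `phys-2`; first-fit keeps filling earlier hosts, which is what drives utilization up.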

Containerized deployment

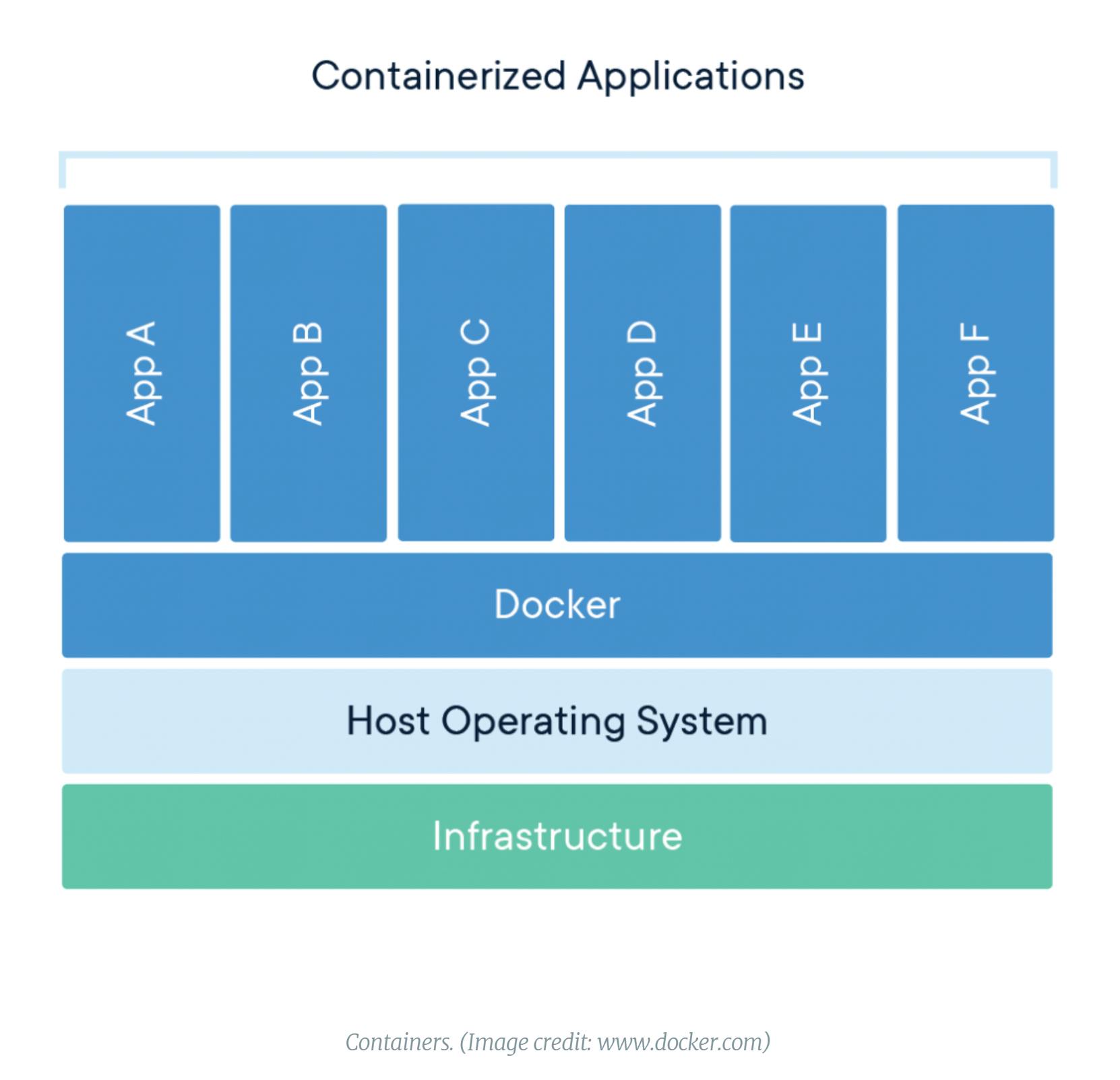

Virtualizing the infrastructure was a step forward in efficiency, but VMs are still resource intensive compared with containers. In this section, I will talk about containers. VMs require disk space and memory for a guest OS and common utilities, in addition to the web app and its dependent packages. Containerization has given us even better ways to make our infrastructure efficient. In layman's terms, a container is a package of an app along with its dependent packages and libraries, without the overhead of a guest OS and other low-level stack. You can think of a container as a lightweight VM that boots fast and makes the app instance available in a matter of seconds. The diagram below depicts multiple containers hosted on a single physical machine. Docker, Singularity and Podman are some examples of popular containerization software that help manage the life cycle of a container.
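A quick back-of-the-envelope calculation shows why dropping the guest OS matters. All memory figures below are assumptions chosen for illustration, and memory used by the host OS itself is ignored.

```python
# Hypothetical figures for illustration only; real overheads vary widely.
HOST_MEM_MB = 65_536          # a 64 GB physical host (host OS memory ignored)
APP_MEM_MB = 512              # memory one web-app instance needs
GUEST_OS_MEM_MB = 1_024       # extra memory each VM spends on its guest OS
CONTAINER_OVERHEAD_MB = 32    # assumed small per-container runtime overhead


def instances_per_host(overhead_mb: int) -> int:
    """How many app instances fit in host memory, given per-instance overhead."""
    return HOST_MEM_MB // (APP_MEM_MB + overhead_mb)


vm_instances = instances_per_host(GUEST_OS_MEM_MB)
container_instances = instances_per_host(CONTAINER_OVERHEAD_MB)
print(vm_instances, container_instances)  # 42 120
```

Under these assumptions, the same host fits 42 VM-based app instances versus 120 containerized ones.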

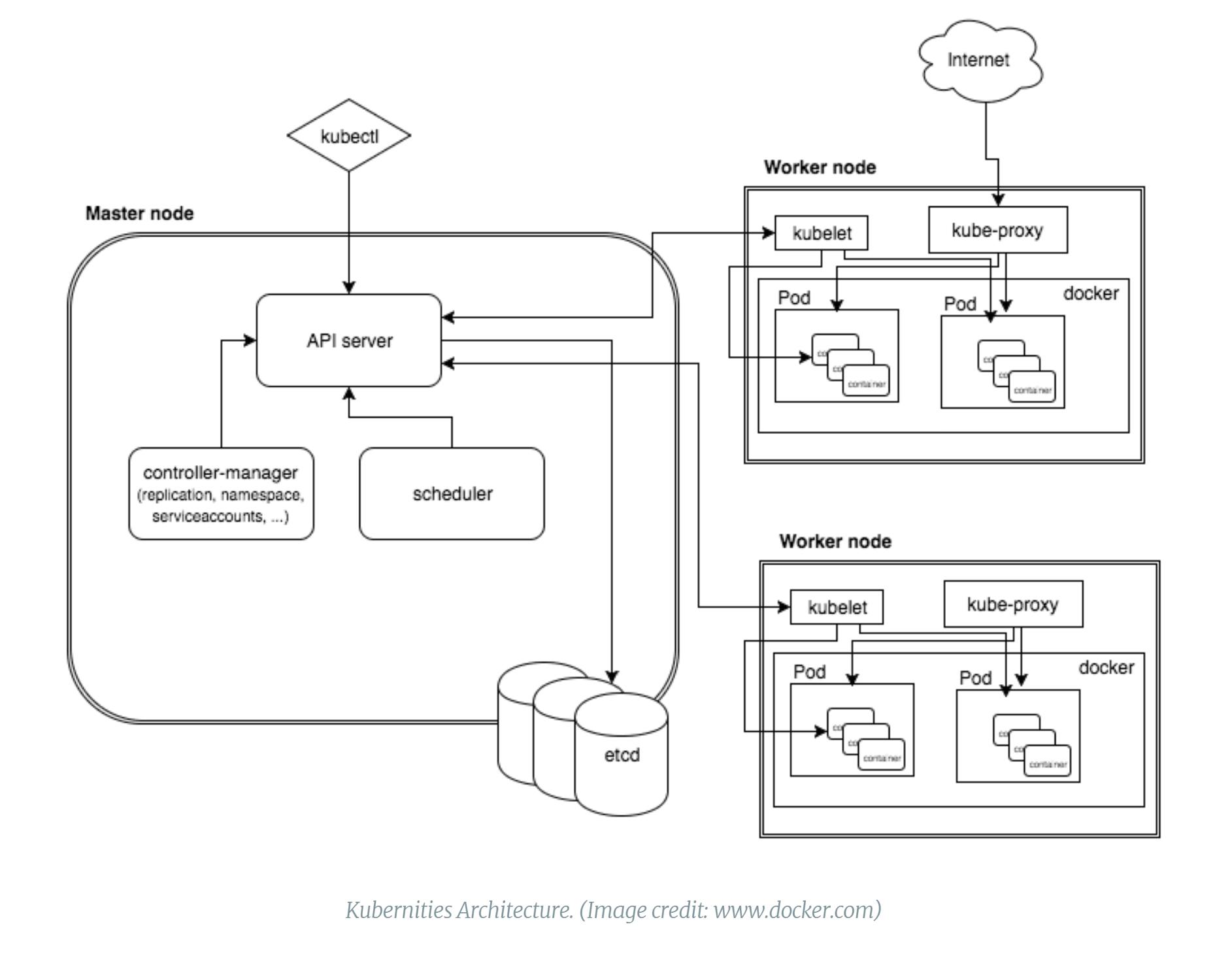

To manage multiple containers and ensure that they scale up and down as required, another cluster-aware piece of software is used, generally known as container orchestration software. Kubernetes is one of the popular container orchestration and cluster management systems. It ensures that the given cluster configuration is always maintained at run time. The diagram below shows the high-level architecture of Kubernetes and how it manages pods (groups of one or more running containers) using Docker. In the diagram, a node represents a single physical or virtual machine. The master node is the management/admin node of the cluster, and the worker nodes are compute nodes (hosts for pods), each with container management software (Docker in this case) installed. Docker on each worker node is responsible for managing the life cycle of containers on that node. Kubernetes talks to Docker through the kubelet, an agent component on each node that relays commands from the API server to Docker on that node. If you want to learn more about containers, container orchestration and how they complement each other, watch this short video about Docker and Kubernetes.
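The idea of an orchestrator ensuring that "the given cluster configuration is always maintained" is a control (reconciliation) loop: compare the desired state with the actual state and act on the difference. The toy model below illustrates only that idea. It is not the Kubernetes API, and the `Cluster` class and pod naming are hypothetical.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy model of desired state (replica counts) vs. actual state (pods)."""

    desired: dict  # deployment name -> desired replica count
    running: dict = field(default_factory=dict)  # deployment name -> pod names
    _ids: itertools.count = field(default_factory=itertools.count)

    def reconcile(self) -> list:
        """One pass of the control loop: move actual state toward desired state."""
        actions = []
        for app, want in self.desired.items():
            pods = self.running.setdefault(app, [])
            while len(pods) < want:  # too few pods: start new ones
                pod = f"{app}-{next(self._ids)}"
                pods.append(pod)
                actions.append(f"start {pod}")
            while len(pods) > want:  # too many pods: stop the newest
                actions.append(f"stop {pods.pop()}")
        return actions


cluster = Cluster(desired={"product": 3, "order": 1})
print(cluster.reconcile())  # ['start product-0', 'start product-1', 'start product-2', 'start order-3']
cluster.running["product"].remove("product-1")  # simulate a crashed pod
print(cluster.reconcile())  # ['start product-4']
cluster.desired["product"] = 1  # scale down
print(cluster.reconcile())  # ['stop product-4', 'stop product-2']
print(cluster.reconcile())  # []
```

A real orchestrator runs this kind of comparison continuously, which is why a crashed container is replaced without anyone issuing an explicit command.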

After going through all of the above, we can easily see how the deployment infrastructure has changed over time, and how reactive and scalable it has become by leveraging cluster capabilities.

Serverless or Function as a Service (FaaS) deployment

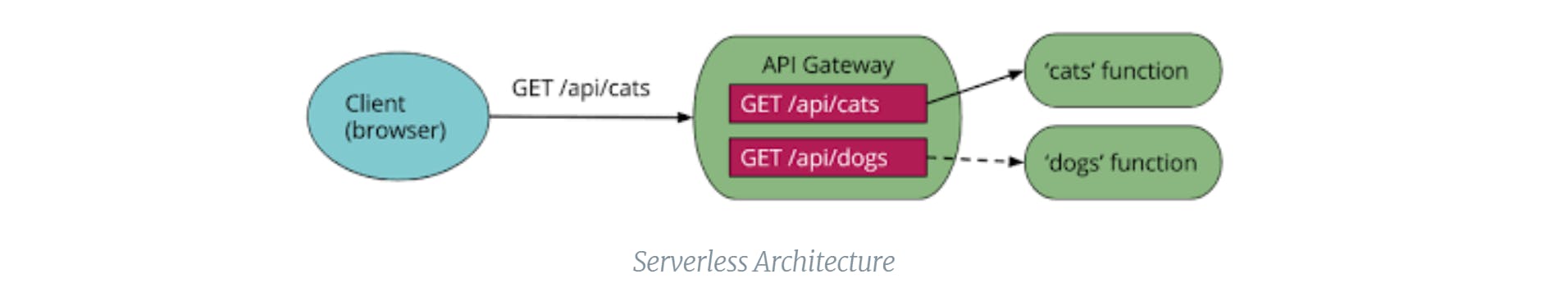

Serverless deployment is a relatively new approach, and things are now moving from containerized deployments to serverless deployments. The key difference between the two is how we build and package our app. I am not certain, but the underlying infrastructure of serverless deployments may well be containers. In a serverless deployment, we do not need to manage any servers. As an app team, we don't even need to know where the servers are or what their IPs or hostnames are; everything is taken care of by the deployment service provider.

In serverless architecture, the deployment unit is an entry point of the app. You can think of an entry point as any callable point in the app that renders a page or accepts/returns data (an API). It is an even smaller unit than a microservice.
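For illustration, here is what one such entry point might look like in Python. It follows the AWS Lambda `handler(event, context)` convention and the API Gateway proxy response shape (`statusCode`, `headers`, `body`); other FaaS platforms use different signatures. The route, product data and function name are hypothetical.

```python
import json

# Hypothetical in-memory catalogue standing in for a real data store.
PRODUCTS = {"p-100": {"name": "Desk lamp", "price_cents": 2499}}


def get_product_handler(event, context):
    """Entry point for a single API route, e.g. GET /products/{id}.

    The platform invokes this function per request; there is no server
    process for the app team to manage.
    """
    product_id = (event.get("pathParameters") or {}).get("id")
    product = PRODUCTS.get(product_id)
    if product is None:
        return {"statusCode": 404, "body": json.dumps({"error": "not found"})}
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(product),
    }


# Local invocation with a hand-built event (no cloud needed).
response = get_product_handler({"pathParameters": {"id": "p-100"}}, None)
print(response["statusCode"], response["body"])
# 200 {"name": "Desk lamp", "price_cents": 2499}
```

Each such function is built and deployed on its own, so changing this route does not touch any other entry point.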

Advantages of Serverless over containerized deployments:

- Deployments are agnostic to the underlying heterogeneous infrastructure.

- As a developer, one does not need to maintain or configure any servers or containers.

- The deployment unit is much smaller than with containers. In serverless, the deployment unit is a function (an entry point for an API, page, etc.), whereas in containerized deployments the unit of deployment is a container, which may include the whole app (middleware logic) or a microservice.

- As the deployment unit is a function (a logical flow), a change to one function does not require redeploying the whole app or microservice; only the changed function is built and redeployed. This results in much easier and faster change management.

- Most cloud providers charge for functions based on invocations and the compute time used to serve each request, whereas for containers they charge based on the container's uptime. As a result, the cost of running your app serverless would normally be lower than running it in containers, unless your app is very popular and serving at full load 24x7.
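To see roughly where the "unless your app is serving at full load" break-even sits, the sketch below compares monthly costs. The prices, request duration and memory size are hypothetical placeholders, not any provider's actual rates; plug in your provider's numbers to get a real answer.

```python
HOURS_PER_MONTH = 730  # approximate average

# Hypothetical prices in USD, for illustration only.
PRICE_PER_INVOCATION = 0.20 / 1_000_000
PRICE_PER_GB_SECOND = 0.00002
CONTAINER_PRICE_PER_HOUR = 0.05


def serverless_monthly_cost(requests: int, duration_s: float = 0.1,
                            memory_gb: float = 0.5) -> float:
    """Pay per invocation plus the compute time actually used."""
    per_request = PRICE_PER_INVOCATION + duration_s * memory_gb * PRICE_PER_GB_SECOND
    return requests * per_request


def container_monthly_cost(containers: int = 1) -> float:
    """Pay for uptime, whether or not any requests arrive."""
    return containers * HOURS_PER_MONTH * CONTAINER_PRICE_PER_HOUR


def break_even_requests(duration_s: float = 0.1, memory_gb: float = 0.5) -> float:
    """Monthly request count at which both models cost the same."""
    return container_monthly_cost() / serverless_monthly_cost(1, duration_s, memory_gb)


for monthly_requests in (1_000_000, 10_000_000, 100_000_000):
    print(monthly_requests,
          round(serverless_monthly_cost(monthly_requests), 2),
          round(container_monthly_cost(), 2))
print(f"break-even ~ {break_even_requests():,.0f} requests/month")
```

With these assumed figures, the break-even point is roughly 30 million requests a month; below that, pay-per-use is cheaper than an always-on container.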

Disadvantages of serverless compared to containerized deployments:

- Deployments are something of a black box. You don't know where your code lives, so you lose a sense of control.

- It is hard to debug in production, as you cannot log in to any server to see what went wrong. Your application-level logging should save you here.
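Since you cannot log in to the server, logs have to carry enough context on their own. A common practice is structured (JSON) logging with a request ID, so that log lines from one request can be correlated in whatever log service your platform provides. The sketch below uses only Python's standard `logging` module; the logger name, the `request_id` field and `handle_order` are hypothetical.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # request_id is attached via `extra=`; "-" when absent.
            "request_id": getattr(record, "request_id", "-"),
        }
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


logger = logging.getLogger("orders")
handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)


def handle_order(order_id: str, request_id: str) -> None:
    ctx = {"request_id": request_id}
    logger.info("processing order %s", order_id, extra=ctx)
    try:
        raise ValueError("payment declined")  # simulated failure
    except ValueError:
        # Logs the message plus the traceback, tagged with the same request_id.
        logger.exception("order %s failed", order_id, extra=ctx)


handle_order("o-42", "req-123")
```

Searching the log service for `req-123` then returns every line that request produced, including the traceback.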

Summary

To make your web app scalable, you need to work on both application architecture and deployment infrastructure. Key takeaways:

- Deployable units should be small (microservices or functions) and independent of other units (functional blocks).

- Deployable units/components should be loosely coupled through the APIs each component exposes.

- Deployment infrastructure should be clustered, so that it can scale up and down as needed.

- Use containers with container orchestration, or a serverless approach, to deploy an app, so that the infrastructure can respond to scaling needs in real time.

If you reached this point, kudos for your reading interest. I know no one likes to read long articles, but frankly, when I started writing this article I did not plan to make it so long. I will try to keep my future articles short and crisp. I hope this article helped shape and clarify some concepts in your mind. Feel free to reach me @gaurav_dhiman or at my website.